GPT4All Prompt Template

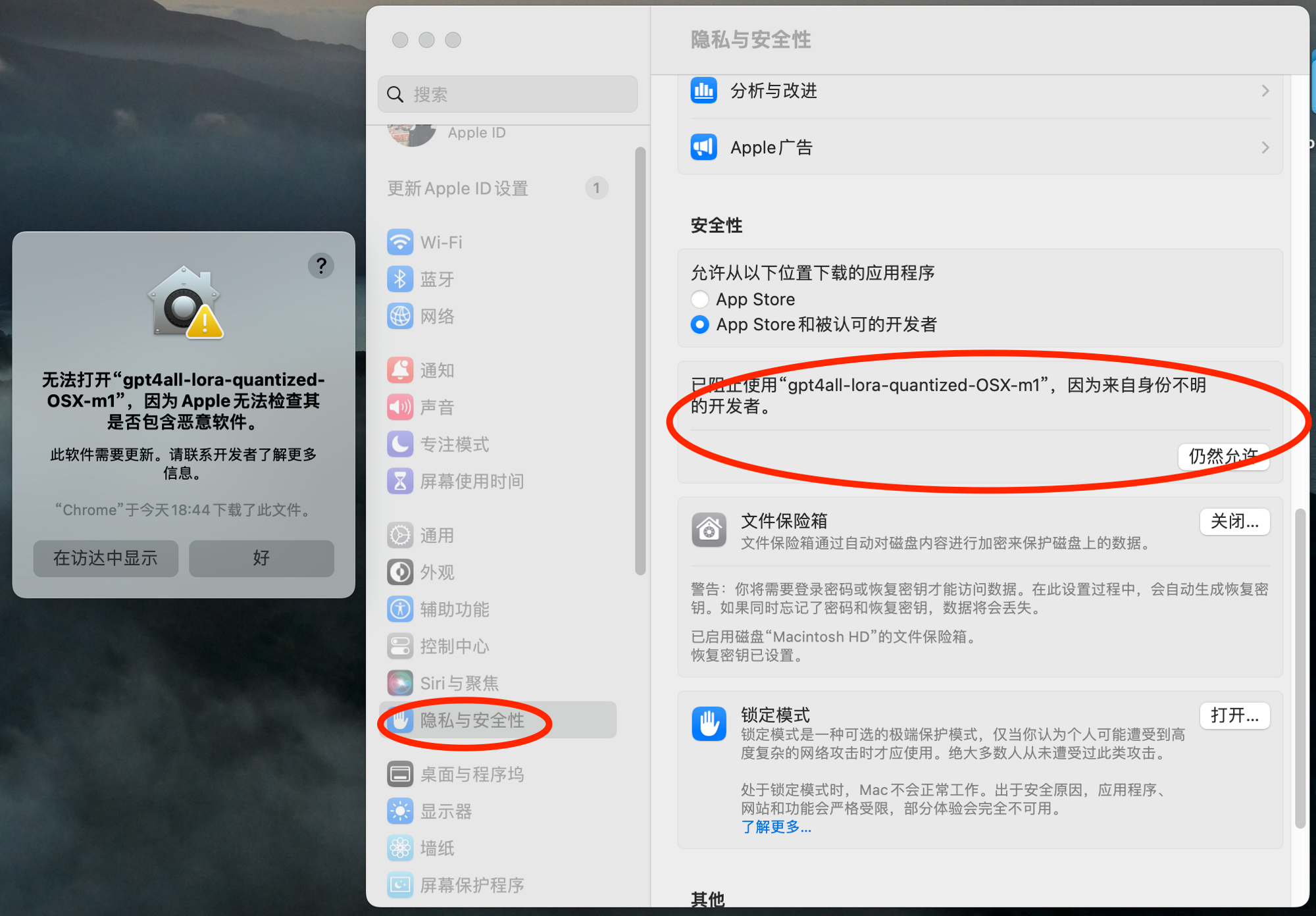

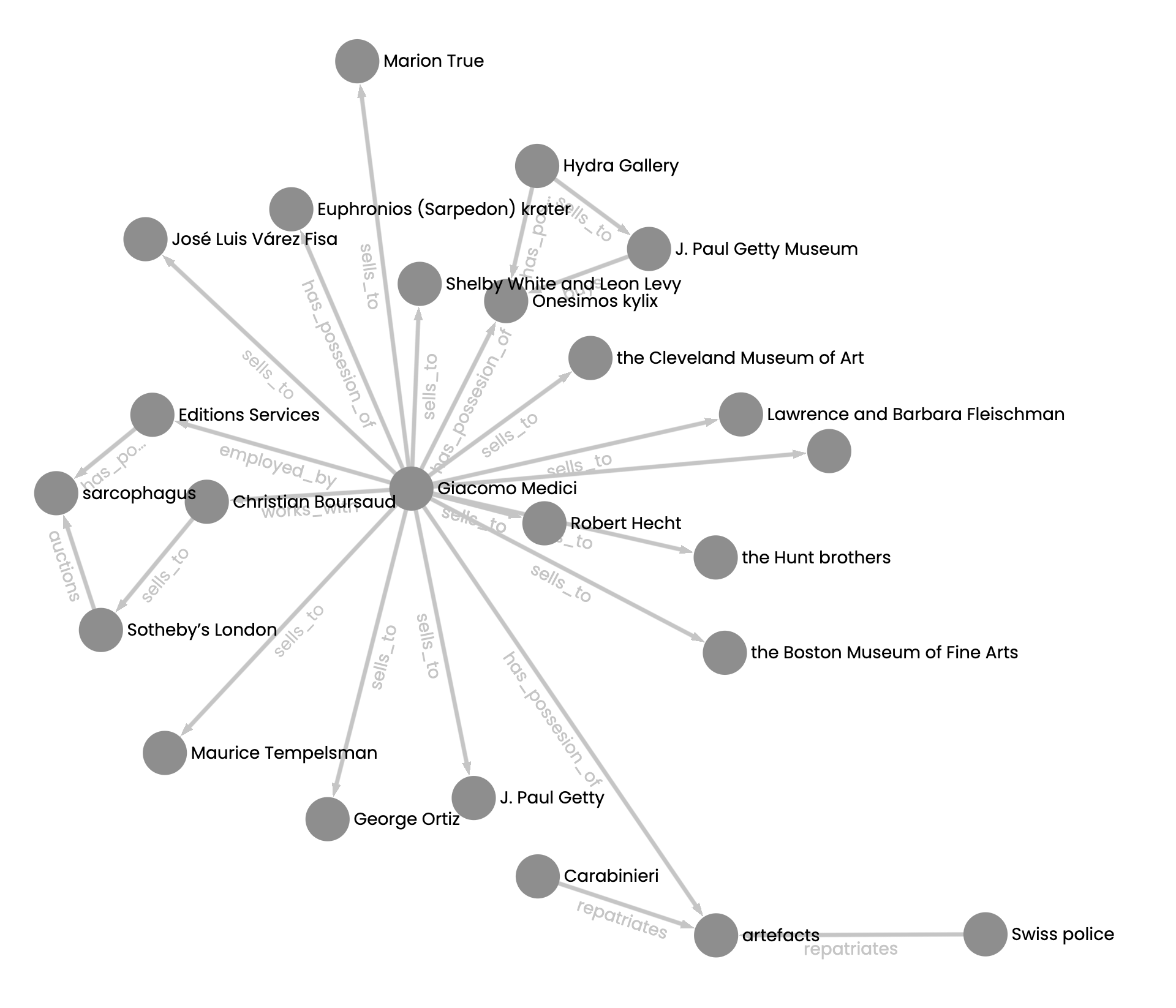

A recurring question is how to change the prompt template in the GPT4All Python bindings (GitHub issue opened by Kiraslith on May 27, 2023, 1 comment). Each prompt passed to `generate()` is wrapped in the appropriate prompt template. If you're using a model provided directly by the GPT4All downloads, you should use a prompt template similar to the one it defaults to. If you pass `allow_download=False` to `GPT4All` or are using a model that is not an official one, you probably need to set the ... One snippet begins "Our Hermes (13B) model uses ..." but is cut off before naming the template.

With LangChain, the imports are `from langchain.llms import GPT4All` and `from langchain import PromptTemplate, LLMChain`. You create a prompt template that contains some initial instructions, for example `prompt = PromptTemplate(template=template, input_variables=["question"])`, and then load the model. A template may end with a cue such as "Let's think step by step." One user reports setting up a GPT4All model locally as the LLM and integrating it with a simple few-shot prompt template through `LLMChain`. Another user writes: "I've researched a bit on the topic, then I've tried with some variations of prompts (set them in: ..."

Other fragments show related patterns. One builds a `ChatPromptTemplate`, calls `chat_prompt.format()`, prints the result, and passes it to a `GPT4All()` instance `m` after `m.open()`. Another defines `prompt_template = f"### Instruction: ..."` and tokenizes it with `tokens = tokenizer(prompt_template, return_tensors="pt").input_ids.to("cuda:0")` before generating the output, noting "This is the raw output from model." The bindings describe the prompt function as "Prompt the model with a given input and optional parameters."

Related material mentions a privateGPT package, model results turned into .gexf using another series of prompts and then visualized, and examples that use Fireworks.ai's hosted Mistral 7B Instruct model to show how to prompt the instruction-tuned Mistral 7B model.

Improve prompt template · Issue 394 · nomic-ai/gpt4all · GitHub
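The `### Instruction:` template and the few-shot setup mentioned above can be sketched in plain Python. This is a minimal illustration, not the GPT4All or LangChain API: the helper names (`format_examples`, `build_prompt`), the sample question and answer pairs, and the `### Response:` suffix are all assumptions made for the example, and the right template for a given model may differ.

```python
# Minimal sketch of a few-shot prompt wrapped in an instruction-style template.
# All names here are hypothetical; no GPT4All or LangChain calls are made.

# Assumed Alpaca-style wrapper; only "### Instruction:" appears in the source.
INSTRUCTION_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"

# Illustrative few-shot examples (made up for this sketch).
FEW_SHOT_EXAMPLES = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
]


def format_examples(examples):
    """Render (question, answer) pairs as 'Question/Answer' blocks."""
    return "\n\n".join(f"Question: {q}\nAnswer: {a}" for q, a in examples)


def build_prompt(question, examples=FEW_SHOT_EXAMPLES):
    """Combine the few-shot examples and the new question, then wrap them."""
    instruction = (
        "Answer the final question in the same style as the examples.\n\n"
        f"{format_examples(examples)}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return INSTRUCTION_TEMPLATE.format(instruction=instruction)


if __name__ == "__main__":
    print(build_prompt("What is the capital of Italy?"))
```

The resulting string is what would be handed to the model. Keeping the wrapper separate from the few-shot body makes it easier to swap in whichever template a particular model expects.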

GPT4All step-by-step beginner tutorial! No internet needed! A GPT that runs locally

![Chat with Your Document On Your Local Machine Using GPT4ALL [Part 1]](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/7dd48876-9766-4a93-930e-a92db76d02c5_750x540.png)

Chat with Your Document On Your Local Machine Using GPT4ALL [Part 1]

nomic-ai/gpt4all-j-prompt-generations at main

GPT4All How to Run a ChatGPT Alternative For Free in Your Python

A perfect Prompt Template ChatGPT, Bard, GPT4 Prompt within 24 Hours

Further Adventures with LLMGPT4All and Templates XLab

How to give better prompt template for gpt4all model · Issue 1178

Additional wildcards for Prompt Template For GPT4AllChat · Issue
